In my previous article, I listed the companies involved in the making of Avatar, as well as the tools they used. Now I want to go into more detail and show some of the technical challenges and innovations that helped shape the world of Pandora and its inhabitants.

While most people see the visuals as Avatar's biggest achievement, it would be more accurate to call it immersion: the feeling of being there, without any distracting cues that the world and its characters are computer-generated.

Bringing characters to life in a convincing manner is a daunting task. Final Fantasy: The Spirits Within was the first film to try, and it failed spectacularly. The reason is psychological. The brain can interpret a simple stick figure as a human, and as the complexity of the figure increases, perceived realism improves. This holds until the character is almost, but not quite, alive. Such a character looks real but dead. This dreaded zone is called the Uncanny Valley. After the Final Fantasy flop, few attempted to cross the Uncanny Valley. The Lord of the Rings' Gollum is often cited as a realistic digital character, but he was supposed to look repulsive, so the challenge wasn't that big.

Performance capture

James Cameron wanted to create beautiful, sexy characters you could fall in love with. Judging from forum discussions, Neytiri seems to have had that effect. For this, the rough motion capture usually employed was not enough.

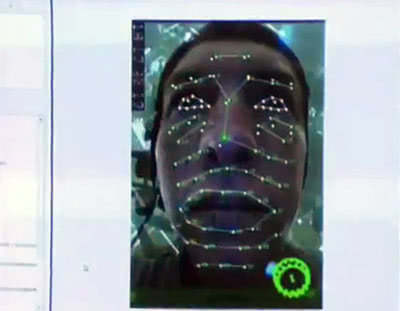

Cameron and his team preferred the term performance capture: capturing all the nuances, body language and feelings of an actor and translating them onto the digital counterpart. For this they used innovative solutions from Giant Studios, the company responsible for all of the motion capture. They also added a helmet with a camera mounted on a rod in front of each actor's face, so that facial expressions could be captured along with the whole-body motion. The helmets also featured ears and braids, which helped the actors stay aware of those parts of their characters. In addition, they used up to 12 HD cameras to film the actors from different angles, in wide shots and close-ups, to preserve all the fine details.

This approach let the animators at Weta concentrate on bringing out every nuance of the original performance, such as small winks, the swaying of the hair or the movement of the ears. It was also a boon for the actors. Freed from tedious concerns like markers, lighting and costumes, they could concentrate on what they do best: conveying emotion.

They also used motion capture for creatures, with actors as stand-ins, and even for helicopters and banshees. This allowed better interaction with the actors and more natural motion paths. That mattered especially for helicopters, which sway along their path in a way only a helicopter pilot can describe. The Weta animators still had to refine the motion and add all the necessary details, but they started from a general motion path that already matched what the filmmakers wanted.

Real-time feedback


Another problem with CGI-heavy pictures is that the actors perform in an empty space. Standing on the stage floor, it is difficult for everyone (actors, camera operators, director) to imagine what the end result will look like. So James Cameron and his team devised a “virtual camera” hooked up to MotionBuilder, which let them see the virtual environments in real time.

“Everybody has their own camera eye and sensibility, and to translate that through an intermediary is very difficult,” said Rob Legato. He was referring to the traditional workflow, in which animators handled the camera movement and each variation took weeks to complete.

For Avatar, they would move the camera on the empty set and the virtual camera would do the same inside the simulated environment. Meanwhile, the actors' movements were being captured and applied to their digital models. In the old workflow, you shot a scene with actors, had the motion interpreted, and received an animation weeks later. Here the production team could see the results instantly, which gave them the essential feedback needed to refine the details. This approach allowed them to stop using storyboards and just experiment. For camera work alone, they could try 15-20 variations (wider, lower) to get the best look.
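At its core, the virtual camera idea is a per-frame mapping. It takes the tracked pose of the physical camera on the stage and turns it into a camera pose inside the virtual set. The following is a minimal sketch under a few assumptions: a pose reduced to a position plus a yaw angle, a y-up coordinate system, and a hypothetical `stage_to_world` helper with a user-chosen origin and scale. The article does not describe how the production's MotionBuilder setup actually did this.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Simplified camera pose: position plus heading (yaw) around the vertical y axis."""
    x: float
    y: float
    z: float
    yaw_deg: float


def stage_to_world(stage: Pose, origin: Pose, scale: float) -> Pose:
    """Map a tracked stage pose into the virtual set (hypothetical helper).

    origin: where the stage's zero point sits in the virtual world, and how it is turned.
    scale:  virtual units per stage unit; values > 1 let a small stage cover a large set.
    """
    theta = math.radians(origin.yaw_deg)
    # Rotate the stage offset about the vertical axis by the origin's yaw, then scale it.
    dx = (stage.x * math.cos(theta) + stage.z * math.sin(theta)) * scale
    dz = (-stage.x * math.sin(theta) + stage.z * math.cos(theta)) * scale
    return Pose(
        origin.x + dx,
        origin.y + stage.y * scale,
        origin.z + dz,
        (origin.yaw_deg + stage.yaw_deg) % 360.0,
    )


if __name__ == "__main__":
    origin = Pose(100.0, 0.0, -50.0, 0.0)
    # Each tracked frame from the stage drives the virtual camera for that frame.
    for tracked in [Pose(0.0, 1.7, 0.0, 0.0), Pose(0.5, 1.7, 1.0, 15.0)]:
        print(stage_to_world(tracked, origin, scale=2.0))
```

The scale factor is what lets an operator walking a few meters on the stage sweep across a much larger virtual location. A real system would also track pitch, roll and lens data.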

We gave Weta what was essentially a rough videogame version of the scene

When a scene was ready, it was handed to Weta. They replaced the low-res models with high-res ones, added the tweaks and small details, and rendered it.

Another clever tool was the Simulcam, a rig that allowed real-time compositing over green screen. They would film actors in areas that required digital set extensions (for example the hangar bay), and the viewfinder would show the composited result, with the digital backgrounds in place of the green screen. This is a huge help for the people behind the camera, who can now see what the scene is going to look like and how to frame it.
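The article doesn't say how the Simulcam pulled its key. As a rough illustration of what real-time compositing over green screen involves per pixel, here is a sketch of a textbook difference key followed by an "over" blend. It assumes the CG background has already been rendered from the matching camera viewpoint. The function names and the `gain` value are hypothetical, and production keyers are far more sophisticated (spill suppression, edge treatment, GPU execution).

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # linear RGB, each channel in [0, 1]


def green_matte(pixel: RGB, gain: float = 2.0) -> float:
    """Return foreground alpha: 0 for clean green screen, 1 for the subject.

    Uses a simple "green minus the stronger of red/blue" difference key;
    `gain` (hypothetical tuning value) controls how hard the edge is.
    """
    r, g, b = pixel
    greenness = g - max(r, b)
    return min(1.0, max(0.0, 1.0 - greenness * gain))


def composite(fg: RGB, bg: RGB, gain: float = 2.0) -> RGB:
    """'Over' blend of the live-action pixel onto the CG background pixel."""
    a = green_matte(fg, gain)
    return tuple(f * a + c * (1.0 - a) for f, c in zip(fg, bg))


def composite_frame(fg_frame, bg_frame, gain: float = 2.0):
    """Apply the key pixel by pixel to two same-sized frames (lists of rows)."""
    return [
        [composite(f, c, gain) for f, c in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(fg_frame, bg_frame)
    ]


if __name__ == "__main__":
    live = [[(0.1, 0.9, 0.1), (0.8, 0.6, 0.5)]]  # green screen pixel, skin-tone pixel
    cg = [[(0.2, 0.3, 0.4), (0.2, 0.3, 0.4)]]    # rendered background pixels
    print(composite_frame(live, cg))
```

Pixels that are clearly green are replaced by the background, and skin tones are kept. Values in between produce partial alpha, which is what softens edges such as hair.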

Attention to detail

The creatures started as sketches on paper and were then digitally sculpted in ZBrush. Vehicles were modeled in Maya, XSI and 3ds Max.

For the avatars, Stan Winston Studio built full-size models of generic male and female Na’vi, which were laser-scanned and tweaked. Incorporating features of the human characters was a challenge in itself, as the avatars had to bear some resemblance to their ‘operators’. Moreover, a facial structure too different from the actor’s face did not translate well through facial performance capture. This is why the Na’vi ended up looking quite similar to the actors who played them.

Speaking of performance capture, to retain every nuance of the body language, the Weta artists built the facial muscles, fat and tissue. They also used state-of-the-art shaders and lighting models to give a sense of depth beneath the skin, replacing the usual sub-surface scattering model with a sub-surface absorption one.
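The article doesn't explain how Weta's sub-surface absorption model works, and the following is not their shader. It only illustrates what "absorption" means here, using the textbook Beer-Lambert law. Light crossing a distance d of a medium with absorption coefficient σ_a keeps the fraction T = exp(-σ_a · d), evaluated separately for each color channel. The coefficients below are hypothetical.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def transmittance(sigma_a: RGB, distance: float) -> RGB:
    """Beer-Lambert law: fraction of light surviving `distance` units of tissue.

    sigma_a holds per-channel absorption coefficients (per unit length);
    a lower coefficient means that color passes through more easily.
    """
    return tuple(math.exp(-s * distance) for s in sigma_a)


def tint_through(light: RGB, sigma_a: RGB, distance: float) -> RGB:
    """Color of light after crossing a slab of the given thickness."""
    t = transmittance(sigma_a, distance)
    return tuple(l * ti for l, ti in zip(light, t))


if __name__ == "__main__":
    # Hypothetical coefficients: red is absorbed least, so thin tissue glows warm.
    skin_sigma = (0.5, 1.5, 2.5)
    white = (1.0, 1.0, 1.0)
    for thickness in (0.1, 0.5, 2.0):  # e.g. a thin ear edge vs. thicker tissue
        print(thickness, tint_through(white, skin_sigma, thickness))
```

Thin regions let most light through with little tint, while thicker regions darken and shift toward the least-absorbed channel. This is one reason translucency reads differently on ears than on cheeks.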

The modeling and texturing of the assets and characters went through several update cycles. As soon as one character model was made more realistic, all the other CGI around it started to look obviously fake and had to be improved as well.

For the vegetation, Weta created a library of almost 3,000 separate plants and trees, which enabled them to “decorate” the Pandora jungle in a realistic fashion. By the way, the vegetation was initially supposed to be cyan, but the jungle looked too alien, so they brought back some green for a more familiar look.
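The article says only that the plant library let Weta “decorate” the jungle. It doesn't say how individual plants were placed. A common technique for this kind of set dressing is to scatter instances with a minimum spacing. The sketch below uses dart throwing, a simple form of Poisson-disk sampling, and picks an asset from the library for each accepted point. The asset ids, area size and spacing are hypothetical.

```python
import math
import random
from typing import List, Tuple

Placement = Tuple[str, float, float, float]  # (plant id, x, z, rotation in degrees)


def scatter(library: List[str], width: float, depth: float,
            min_spacing: float, attempts: int, seed: int = 0) -> List[Placement]:
    """Dart-throwing scatter: accept a random point only if no earlier
    plant lies closer than `min_spacing`. Seeded so the layout is repeatable."""
    rng = random.Random(seed)
    placed: List[Placement] = []
    for _ in range(attempts):
        x, z = rng.uniform(0.0, width), rng.uniform(0.0, depth)
        if all(math.hypot(x - px, z - pz) >= min_spacing for _, px, pz, _ in placed):
            # Random species and heading so repeated assets don't look cloned.
            placed.append((rng.choice(library), x, z, rng.uniform(0.0, 360.0)))
    return placed


if __name__ == "__main__":
    plants = ["fern_a", "fern_b", "shrub_c", "tree_d"]  # hypothetical asset ids
    layout = scatter(plants, width=50.0, depth=50.0, min_spacing=3.0, attempts=2000, seed=42)
    print(len(layout), layout[:3])
```

A fixed seed matters in production: the same shot must get the same jungle every time it is re-rendered. A large library with random rotation keeps the repetition from being noticeable.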

Further reading

You can find out more about the companies and software involved in the making of Avatar in my previous article. Weta did most of the work, but even they were overwhelmed by the sheer number of CG shots. They received help from ILM for the shots involving the humans’ aircraft and from Framestore for the Hell’s Gate compound.

PS: Congratulations on winning the Golden Globes. Despite its detractors, I believe Avatar is a strong film on both a technical and a human level.

14 Responses

great article,

thanks a lot for this. i was wondering how they got the skin to look so great.

also the movement of the lips over the teeth

and compression when the characters grab and kiss.

amazing artwork…

a sub-surface absorption layer, interesting.

this is proprietary technology i assume..

(pity :P) but it makes sense.

ps: any knowledge about it being used differently among characters?

(for instance Jake’s avatar seems more “translucent” than Neytiri’s in the above shot)

it could be lighting, but it seems the shader is different.

another thing for me are the environments and plants, they are wonderful,

not only in design, but the rendering quality is off the charts…

i first believed they were actually filmed,

they had me fooled…

in any event…

this movie’s artwork amazes me more and more every time.

hat’s off to all who worked on it!

Can you explain how I can prepare skin in Photoshop, and share some important points about Photoshop?

You already have a plugin to do things like compression in Maya

check

http://kickstandlabs.com/site/stretchmesh/stretchmesh-features/

Or, just use the one that comes with Maya.

Weta Digital would have built their own compression tool into their rigging pipeline, so they can go in there and continually improve and refine the tool for exactly what they want, not just in Avatar but in any subsequent movie projects. So most of the time it’s a better investment for them to build their own.

Wait till the DVD or Blu-ray comes out, and you’ll see that they even went the extra mile to create all the tiny muscle and tendon twitches on the neck and other body areas. The audience won’t notice them unless they go frame by frame… but they’re there to boost the realism of each Na’vi 🙂

Differences in look between Na’vi characters could come down to the following reasons:

1) Different stage of production ~ Some shots rendered as early as mid/late 2008 might have less refined shaders than renders from the end of 2009, which would no doubt have more mature tweaks.

2) Variation of environment ~ Depending on the environment (bright sun, gloomy day, rainy day, in the open, in the middle of the jungle, etc.), lighting TDs set different IBLs, as well as other lights positioned and angled around the characters for that particular shot. This affects the overall skin tone and translucency of any character, and it varies between shots.

3) Different lighting TDs ~ Over 100 lighting TDs worked on Avatar, so it’s hard to keep consistency across shots… Even within a sequence, where you ideally want lighting continuity from shot to shot, there can be slight differences in tone, simply because each lighting TD brings their own technique and experience to their shots. However, most of this can be corrected or controlled through the CG supervisors’ direction and, of course, James Cameron’s perfectionist eye… and last but not least, final comping can tweak and fix any major difference in tone between shots.

The above reasoning is just my personal guess, so don’t take it too literally as the right answer 😛

Anyway, a great article that sums up the overall technical accomplishment of Avatar!

What was the main render engine for the scenes? Was it Pixar RenderMan? And is there any more info on how Weta built the animals? When you talk about the various internal layers, were they ALL modeled the way muscles are, or was it some other method? Or were they used as subcutaneous layers for rendering?

Weta and ILM used RenderMan.

The scenes with the human base (Hell’s Gate) were done by Framestore, not sure what they used (someone mentioned Houdini).

My understanding is that they created a whole muscle system for some of the animals and Na’vi faces.

thanks for the quick reply on the rendering. I can believe Houdini was used for the muscles. It has a great system for visualizing muscle mass and attachment (including realistic muscle jiggle and the like), but it’s really long-winded to set up, as you have to describe so much in the process.

Anyway, I really appreciate this article, because it’s so hard to find out about the production side of animation if you’re interested in the industry.

that movie is like so good

like it wos like real to me

i do not no what to say

but wow

if i avr head a shot be one of thos Avatar’s that would mak my day

it would be alot of fun doin it

yea but it would be alot of work but at the sam time it would be fun as will

i seend the movie like now 8 times so far and i really love it

its to the pont that i spak like a Avatar

that is what it taks to be a akter and i can evein fak cry alot so yea i hop to see a nother

Avatar movie

I’m happy that you loved the movie, but please try to use proper spelling and grammar next time, OK?

Wooow

i am a student at the Film and Television Institute of India, Pune (INDIA)

i found this information very useful…

please post more information.

thanks…

Hi,

I really wanted to ask whether any concept models of the Na’vi were made in silicone and finished to a high standard. I am intending to make one for my final year project at university, and I need an industry context and evidence of this to show my tutors. If you could help in any way, that would be fantastic.

Thanks,

Lucy

I love Avatar. The movie was visually stunning as well as a great sound show. This reply may be a bit late, but I just wanted to know how they rendered everything so realistically. I’ve heard that they used a renderer called PantaRay that was developed by Weta Digital and Nvidia. But what I really want to know is how they got the Na’vi to look so damn realistic, and not just the Na’vi but every other creature and plant in Avatar. If anyone has resources or information about this, I’d appreciate it if you could share them with me. Thanks

Aaron
