I’ve been thinking about writing this article for a very long time, unsure whether there would be any public interest now that cloud backup solutions are ubiquitous and simple to set up. However, in light of recent cloud failures, I figured it’s time to share my 20+ years of experience with backups for small companies.

Why? Because there is no cloud, only someone else’s computer

OK, technically, that’s not true. When Amazon first introduced Elastic Compute Cloud (EC2) in 2006, they had a specific model in mind – the key point being the elastic part. This means that users could quickly scale resources up and down based on demand, e.g. adding more server instances before Black Friday and then disposing of them when not needed. This kind of elasticity was indeed revolutionary for companies, as they no longer needed to provision expensive hardware ahead of time.

But of course “cloud” quickly became a buzzword, like A.I. or blockchain, and I’m sure there’s a company out there offering blockchain-based AI “in the cloud”.

So when it comes to “cloud storage”, it just means that your data is stored somewhere on a hard drive in a data center. Why should that concern you?

First, there are legal implications. If you’re based in the EU and store personal customer data, you have to think about the protection rules on international data transfers. Or say that you’re in the US. Can you be sure your data is not stored in a datacenter in China, unencrypted? How would you know? Then there’s always the risk of your account being compromised. It happened to Hollywood celebrities using iCloud and it happened to cyber security specialists.

More worrying are the failures of the providers themselves. Over the years, there have been numerous instances of big cloud providers dropping the ball. In April 2011, Amazon’s entire US-East region crashed. Many top sites were unavailable for hours, despite being configured according to Amazon’s guidelines for high availability. In the same year, a mind-boggling bug in Dropbox allowed users to log in with any password. And if you think stuff like that only happened in the past, just the other day (November 28, 2023) Google users started complaining that Google Drive was deleting their documents. Google issued an update about a week or two later, allowing users to restore the files from a backup.

Finally, there’s capacity, speed and cost. This is more difficult to gauge, because the requirements vary from one user to the other. A business owner who needs to store contracts and invoices has different requirements from a wedding photographer, while a videographer has additional needs. 5-15 GB may be more than enough to keep a bunch of Word and Excel files, but for someone doing 4K video editing it would be completely inadequate.

Fine, I’ll do it myself

First, we need to build our very own “local cloud”, which is a fancy term for a NAS (Network Attached Storage): nothing more than a small server with some hard drives.

What you need:

- A UPS (uninterruptible power supply) or SPD (surge protection device)

- A NAS with two or more bays

- Two or more hard drives

Protection

Some sort of surge protection is essential, because in my experience power fluctuations are the number one hard drive killer. SPDs are quite cheap. A decent Tripp Lite costs $15 on Amazon. If your home/office is new, it may already have permanent surge protection installed. I’ve noticed power fluctuations near construction sites, but an SPD will help even in the case of a lightning storm.

A fancier solution would be a UPS. UPS devices have built-in surge protection and can also power your devices during an outage. Since routers and NAS devices don’t require a lot of power, a relatively small UPS can keep the essential devices running for hours. There are many brands to choose from and you can find a decent UPS at around $100.

At Media Division, we use two separate internet providers and the router can balance the load between them. The fiber optic media converter, router, switches and the NAS server are all powered by a UPS.

Storage

Now, you should decide how much storage space you need. While most cloud storage providers tout 2 TB as their top/premium offering, if you choose to build your own storage, 2 TB serves as the entry level and it’s not even cost-effective. You can acquire a 2 TB drive for $74 and a 4 TB one for $80.

Let’s say you decide that 4 TB is enough. You’ll need at least two identical drives. Why? Because redundancy. Even with surge protection, drives can fail (fortunately, if you use a reputable brand and model, such events are very rare). You need to ensure that such an event is nothing more than a minor inconvenience, so setting up a RAID is essential. RAID stands for Redundant Array of Inexpensive Disks. While its history is fascinating and complex and could better explain the choice of words (including “inexpensive”), it’s beyond the scope of this article.

Suffice to say that you’ll set up your disks in a RAID 1, RAID 5, or RAID 6 configuration. RAID 1 is the simplest: there are two sets of disks, each one a mirror of the other. Every time data is written, it’s written to both. When reading data, the system decides which copy to read from. If the system detects an inconsistency, it will attempt to repair the data automatically. If one drive fails, it can be hot-swapped: you just pop out the failed drive and put in a new one without shutting down the NAS, and the system will automatically rebuild the array. Pretty neat! So, RAID 1 requires an even number of disks. RAID 5 is more complex and more space-efficient, but requires at least three disks. It can recover from the failure of one disk. RAID 6 requires at least four disks and can recover from two failed disks.
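
To make the trade-off concrete, here is a minimal Python sketch of how much usable space each RAID level leaves you with, assuming identical disks. The function name and the error messages are made up for this illustration; RAID 1 is modelled the way it is described above (two mirrored sets), and real NAS firmware reserves some extra space for its own use.

```python
def usable_capacity_tb(level: int, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB for `disks` identical drives of `disk_tb` TB each."""
    if level == 1:
        # Two mirrored sets: half of the disks hold the copy.
        if disks < 2 or disks % 2:
            raise ValueError("RAID 1 needs an even number of disks (2 or more)")
        return disks // 2 * disk_tb
    if level == 5:
        # One disk's worth of space is used for parity.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb
    if level == 6:
        # Two disks' worth of space is used for parity.
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return (disks - 2) * disk_tb
    raise ValueError("only RAID 1, 5 and 6 are covered in this sketch")


print(usable_capacity_tb(1, 2, 4))    # two 4 TB drives mirrored
print(usable_capacity_tb(5, 3, 16))   # three 16 TB drives in RAID 5
```

Two mirrored 4 TB drives give you 4 TB of usable space, while three 16 TB drives in RAID 5 give you 32 TB.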

In terms of brand, I recommend WD Red. They are specifically designed for NAS devices and are built to run reliably for years. Seagate IronWolf drives are good, too. You can get two WD Red 4 TB drives for $160.

Don’t bother with SSDs. They are more expensive, and network lag will nullify their speed. It’s also more complicated to determine their lifespan and storage capacity based on their write cycles.

NAS

This is the most important part. NAS devices are basically small servers with a web interface, running Linux underneath. There are two brands I recommend: QNAP and Synology. I have experience with both and overall each one has its strengths and weaknesses: QNAP has more powerful hardware; Synology has a nicer and easier interface. If you don’t know which one to choose and don’t have any specific requirements, go with Synology.

The entry level would be either Synology DS 723+ or QNAP TS-262. Both have two drive bays to put your WD Reds in them and cost about the same: $400.

Both companies have more sophisticated solutions, even with 16 bays for over 100 TB of storage or for specific scenarios.

I would stay away from smaller NAS solutions from companies such as WD, Zyxel, and others. They had many incidents over the years.

Cost efficiency vs the cloud

The more space you need, the better off you are with the local solution, because local and cloud costs scale differently:

The hardware costs for 4 TB of storage would be around $600. For comparison, 5 TB from Google costs $250/year, so you’d recoup your investment in about two and a half years.

If you opt for, say, 8 TB drives, you’d pay $700 upfront vs. $500/year for cloud; you’d get your investment back in about a year and a half.

For 32 TB you’d use 3x16TB drives in RAID-5 and a $600 NAS for about $1300, compared with $1500/year for cloud, so it’s instantly cheaper.
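
The break-even arithmetic for the three scenarios above can be checked with a few lines of Python. This is only a rough sketch: it uses the prices quoted in this article and ignores electricity, drive replacements and any cloud price changes.

```python
def break_even_years(hardware_cost: float, cloud_cost_per_year: float) -> float:
    """Years after which the one-off hardware cost equals the cumulative cloud fees."""
    return hardware_cost / cloud_cost_per_year


# (hardware cost in $, cloud cost in $/year), as quoted in the text
scenarios = {"4 TB": (600, 250), "8 TB": (700, 500), "32 TB": (1300, 1500)}

for name, (hardware, cloud) in scenarios.items():
    print(f"{name}: break-even after {break_even_years(hardware, cloud):.1f} years")
# 4 TB: break-even after 2.4 years
# 8 TB: break-even after 1.4 years
# 32 TB: break-even after 0.9 years
```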

This doesn’t even take speed into account. How much time would it take to upload or download 1TB over the internet? What about data transfer caps from your internet provider?
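
To put a number on that question, here is a small Python sketch. The link speeds are assumptions picked for illustration (a 100 Mbit/s internet uplink versus a gigabit LAN), and real-world throughput is always lower than the nominal line rate.

```python
def transfer_hours(size_tb: float, link_mbps: float) -> float:
    """Hours needed to move `size_tb` terabytes over a link of `link_mbps` Mbit/s."""
    bits = size_tb * 1e12 * 8            # 1 TB = 10^12 bytes
    seconds = bits / (link_mbps * 1e6)
    return seconds / 3600


print(f"1 TB at 100 Mbit/s:  {transfer_hours(1, 100):.1f} hours")
print(f"1 TB at 1000 Mbit/s: {transfer_hours(1, 1000):.1f} hours")
# 1 TB at 100 Mbit/s:  22.2 hours
# 1 TB at 1000 Mbit/s: 2.2 hours
```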

In terms of electricity usage, a 2-bay NAS uses 10 to 20 W, depending on the operating mode. You can put it to sleep at night. Assuming 15 W typical power usage, 10 hours a day comes to about 55 kWh per year, or about $8.

Access security

Do not let your NAS be available on the internet. Both Synology and QNAP (and likely all others) offer some form of “connect from anywhere” feature. Don’t use it. Let me be clear: your NAS should only be accessible from your LAN, behind the router’s firewall. All major brands have experienced hacking incidents, leading to users discovering their devices locked by ransomware.

But isn’t limiting your storage solution to the local network a significant drawback? No, because the next step is to use a VPN.

Any decent router supports one or more VPN types. The best kind is IPSec VPN, also sometimes branded as site-to-site VPN. It’s pretty cool, although, depending on the router brand, it can be a bit more difficult to set up. Several routers in different locations can be set up to form one large network (each location can use a different subnet). Therefore, if you have two offices, they can access one NAS. For users, it’s seamless: they don’t even notice or care whether a resource is physically on-premise or not.

A solution for individual devices is OpenVPN. You set up OpenVPN in the office router, and then you can connect to it from anywhere. There are OpenVPN clients for all major platforms. You connect and gain access to the LAN, allowing you to interact with the NAS. There are other VPN protocols that are widely supported, like L2TP or PPTP, but they are not considered secure.

While there are other physical security considerations, they are case-by-case. Both Synology and QNAP allow you to encrypt the drives if needed. Of course, encryption somewhat reduces the performance.

You can then set up users, roles and rights on the NAS and you can share the NAS’ resources according to your needs.

Synchronizing, Versioning, and Backing Data up

Now with the hardware in place and with the basic security set up, it’s time to get to the fun part.

One thing to keep in mind is to think of layers, or defense in depth. Remember the classic engineering adage: “if something can go wrong, it will”. You have to think what can go wrong, how bad it would be and how to mitigate it.

What follows is not “the” way to do things. You may skip some steps or add new ones, depending on your budget, how much data you have and how important it is.

Synchronizing workstations

The first step is to protect files from workstation crashes and accidental deletion. These are, by far, the most common issues.

You and the other users can keep working on your laptops, while the files are saved on the NAS. You’d set up a shared folder on the NAS, say Company_Docs, and have a program sync the changes. There are many solutions, and both QNAP and Synology have their own sync clients, but I really like a free program called FreeFileSync. FreeFileSync can do two-way sync between folders and it’s very fast, because it can use multiple threads to transfer many files at once, a very important feature when you need to sync a large number of small files.

Sync programs can run on a schedule or in real time (the file is synced as soon as it’s saved). Don’t use real-time syncing, because it copies mistakes to the NAS immediately. The most common scenario is that someone inadvertently deletes a document, or overwrites it by mistake, or (less commonly) the file somehow becomes corrupted. While a deleted file can be easily restored, an overwritten or corrupted one can’t be. If you have a daily sync routine set, say every day during the lunch break, then you can easily get the file back from the NAS, and it would be at most a day out of date.

Versioning / Snapshots

What if a file goes missing for a long time without anyone noticing? Or you need to find last year’s revision of a document?

Modern NAS devices can do transparent block-level versioning. If you set up a shared folder on the NAS for snapshots, the system will take a snapshot of that folder every day or hour and will keep the past 256 (actual number depends on several factors) snapshots. The snapshots contain only the difference from the previous version, so they are very space-efficient (block-level versioning means that for larger files, the system keeps track of the different areas in a file, rather than the whole file).
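
To illustrate the idea of keeping only the changed areas of a file, here is a toy Python sketch. It is not how a NAS implements snapshots (those work at the file system or volume level); the 4 KB block size and the function names are arbitrary choices for this example, and it does not handle a file that shrinks.

```python
import hashlib

BLOCK_SIZE = 4096  # bytes; arbitrary block size chosen for this illustration


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Hash every fixed-size block of the data."""
    return [hashlib.sha256(data[i:i + block_size]).hexdigest()
            for i in range(0, len(data), block_size)]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block index: new block contents} for blocks that differ from `old`."""
    old_hashes = block_hashes(old, block_size)
    delta = {}
    for index, digest in enumerate(block_hashes(new, block_size)):
        if index >= len(old_hashes) or digest != old_hashes[index]:
            start = index * block_size
            delta[index] = new[start:start + block_size]
    return delta


old = bytes(1_000_000)                  # a 1 MB "file" full of zeros
new = bytearray(old)
new[500_000:500_010] = b"edited!!!!"    # change 10 bytes in the middle
delta = changed_blocks(old, bytes(new))
print(len(delta), "block(s) stored,", sum(map(len, delta.values())), "bytes")
# 1 block(s) stored, 4096 bytes
```

A 10-byte edit in a 1 MB file costs a single 4 KB block rather than a second copy of the whole file.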

File versions can be accessed by the Windows users via the “Previous version” context menu item. This way, you can easily check an old version of a document that was overwritten months ago!

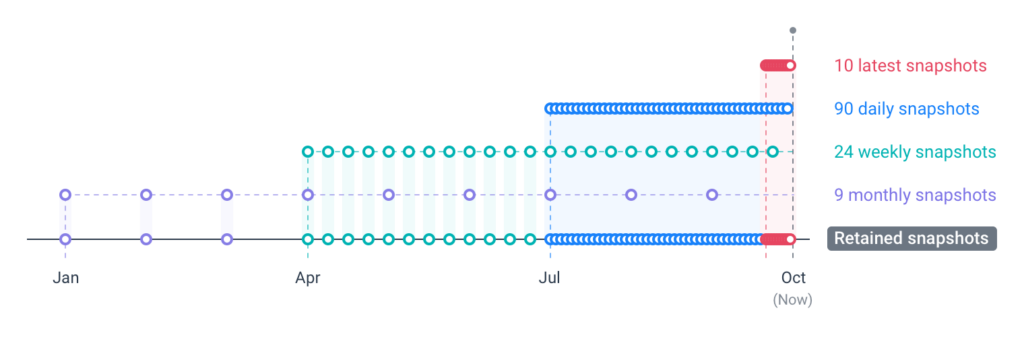

This transparent versioning system has saved my bacon more times than I can count. Our snapshot system is set to keep up to 256 previous versions: daily snapshots for the past 90 days, then weekly snapshots for the past 52 weeks, then monthly snapshots for the past 10 years. For every file or folder, I can easily go as far back in time as I need to find what I’m looking for.
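
On a Synology or QNAP you configure the retention schedule in the web interface, but the logic behind a daily/weekly/monthly policy is easy to sketch in Python. The function below is only an illustration of such a policy, not the NAS vendors’ algorithm; it approximates 10 years as 3650 days and keeps the newest snapshot of each week or month.

```python
from datetime import date, timedelta


def snapshots_to_keep(snapshots: list[date], today: date) -> set[date]:
    """Keep dailies for 90 days, one per week up to 52 weeks, one per month up to ~10 years."""
    keep = set()
    seen_weeks = set()
    seen_months = set()
    for snap in sorted(snapshots, reverse=True):      # newest first
        age = (today - snap).days
        if age <= 90:
            keep.add(snap)                            # every daily snapshot
        elif age <= 52 * 7:
            week = tuple(snap.isocalendar())[:2]      # (ISO year, ISO week)
            if week not in seen_weeks:
                seen_weeks.add(week)
                keep.add(snap)
        elif age <= 3650:
            month = (snap.year, snap.month)
            if month not in seen_months:
                seen_months.add(month)
                keep.add(snap)
    return keep


today = date(2024, 1, 1)
two_years_of_dailies = [today - timedelta(days=n) for n in range(730)]
kept = snapshots_to_keep(two_years_of_dailies, today)
print(f"{len(kept)} of {len(two_years_of_dailies)} snapshots kept")
```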

This feature is also perfect for ransomware protection.

Backing up a remote server

You can use your NAS to back up the data from remote web servers. This is slightly more complex, because at this time there is no nice user interface on the NAS for this. Remember that your NAS is essentially a small server running Linux. You can connect to it and set up a cron job to back up data from a remote server using rsync. If you don’t know what I’m talking about but need the feature, let me know and I’ll expand in a later article.
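
As a starting point, here is a minimal Python sketch of such a job. It assumes that `rsync`, SSH key authentication and Python are available on your NAS, and every host name and path in it is a hypothetical placeholder. You would call `run_backup` from a script scheduled with cron.

```python
import subprocess


def build_rsync_command(remote: str, remote_path: str, local_path: str) -> list[str]:
    """Build an rsync command that pulls `remote_path` from `remote` over SSH."""
    return [
        "rsync",
        "-a",             # archive mode: recurse, keep permissions and timestamps
        "-z",             # compress data during the transfer
        "-e", "ssh",      # use SSH as the transport
        f"{remote}:{remote_path}",
        local_path,
    ]


def run_backup(remote: str, remote_path: str, local_path: str) -> None:
    """Run the transfer; raises CalledProcessError if rsync reports a failure."""
    subprocess.run(build_rsync_command(remote, remote_path, local_path), check=True)


# Hypothetical example values: replace with your own server and shared folder.
command = build_rsync_command("backup@example.com", "/var/www/", "/share/Backups/webserver/")
print(" ".join(command))
```

Pointing the destination at a shared folder that has snapshots enabled gives you versioned backups of the remote server for free.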

Offsite syncing

For even better resilience, you can install a second NAS offsite and have the two sync each other. This may be overkill in some scenarios or really useful in others.

If you work from home and high-availability come hell or high water is not a priority, you don’t need it. On the other hand, if your company has two main offices connected through IPSec (site-to-site) VPN and the employees need fast access to large files, having a NAS in each office makes sense. The two devices can sync their content so that the users always access the files from the local LAN.

This adds another layer of protection: even if the NAS in one location is physically destroyed, all data is still available in the other location.

Archiving

So far we have only discussed live data. The next step is to have an archiving system. Even with versioning, files can become corrupted without anyone noticing for months. Ransomware like Ryuk can actually wake up sleeping devices on the local network to encrypt files on their drives, and since it runs with user privileges, if you can write to a file on the network, so can the malware.

My approach is to have an Archive folder for completed projects, invoices, emails, and other files that need to be accessible but not modified in any way. The Archive folder is shared as read-only for all network users. To archive a file or folder, users are instructed to place the files on the NAS in a toArchive folder or whatever. Then a cron script would periodically compress the folder and move it to the Archive. QNAP can automate this process with an app called QFiling, but it’s quite easy to write a small script for this purpose.

This way, the Archive will contain files and folders (further organized into categories) that are read-only for the users and can’t be altered by malware or deleted by mistake.
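
A small script for this could look like the Python sketch below. It is a minimal illustration rather than the script we actually run: it handles folders only, does not deal with name collisions, and the paths in the usage comment are hypothetical.

```python
import shutil
import stat
from pathlib import Path


def archive_pending(inbox: Path, archive: Path) -> list[Path]:
    """Compress each folder found in `inbox` into `archive`, then remove the original."""
    archive.mkdir(parents=True, exist_ok=True)
    created = []
    for item in sorted(inbox.iterdir()):
        if not item.is_dir():
            continue  # this sketch only handles folders
        # make_archive appends ".tar.gz" to the base name and returns the full path
        tarball = Path(shutil.make_archive(
            str(archive / item.name), "gztar", root_dir=inbox, base_dir=item.name))
        # mark the archive file itself as read-only
        tarball.chmod(stat.S_IRUSR | stat.S_IRGRP | stat.S_IROTH)
        shutil.rmtree(item)
        created.append(tarball)
    return created


# Usage from a cron-scheduled script (hypothetical paths):
# archive_pending(Path("/share/toArchive"), Path("/share/Archive"))
```

The read-only share permissions on the NAS remain the real protection; the file mode set here is just an extra safeguard.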

Remember, not everything needs to be archived, just important, completed stuff.

Offline backup

I’ve used various storage media over the years, starting with magnetic tapes for my ZX Spectrum (yes, I’m that old). I used everything from 360K 5.25″ floppy disks to Blu-Rays.

As the amount of data we need to back up gets larger, cost-effective offline backup solutions become scarcer. On the other hand, you don’t need to store everything, just the essentials.

Media choices

Magnetic tape backups remain the “professional” option, but also the most expensive. It’s around $500 for a tape drive and $100 for a 50 TB tape (and you need at least two). Personally, I’ve been using CDs, DVDs and Blu-Rays for years. An external writer and the discs are cheap enough ($100 for a Verbatim external Blu-ray writer). The problem is that even 50 GB is not that much nowadays and the reliability is not that great (never ever use RW discs, they are really bad).

As I mentioned, you don’t need to keep offline backups of everything, because it would be impractical. For relatively small data sets, one can use SD cards. A SanDisk Extreme Pro 128 GB SD card costs only $20. What I like about SD cards is that they are inexpensive and can be write-protected, so data can’t be deleted accidentally. They should last for many years, especially when not constantly written to. USB memory sticks, SSDs or external HDDs can be used as well; the only thing that I don’t like about them is that there’s no easy way to make them write-protected.

A few more considerations about what to choose: external HDDs are great, but somewhat fragile. You won’t want to throw them in your backpack, and they can be affected by magnets. They do work nicely even after years of storage, though, and even if damaged, you can usually recover some data. Solid-state storage (SSDs, cards, memory sticks) is sturdier since it has no moving parts and fewer electronic components and is not affected by magnetic fields, but if it fails, the data is generally unrecoverable. Whatever you choose, make two copies. Remember – two is one and one is none.

| Name | Pros | Cons | Writer cost | Price per TB |
|---|---|---|---|---|
| Magnetic tapes | Can store large amounts of data. Lowest price per TB. | Quite slow. Difficult to restore from. Requires specialized hardware. Affected by magnetic fields. | $500 | $5 |
| Optical media | Write-once. Relatively simple storage req. | Not as ubiquitous as before. Average reliability. | $100 | $60 |
| SD cards | Can be write-protected. Very easy to read or write. | Expensive for large amounts of data. | | $140 |
| USB thumb drives / SSD | Ubiquitous. Fastest. | No easy way to write-protect. | | $50 |
| HDD | Low price per TB. Easy to connect via USB. | Affected by magnetic fields. More fragile. No easy way to write-protect. | | $15 |

Compressing data

Unless you back up photos (including DNG) and video, you should compress the files to save space. Use a solid compression scheme. Solid compression means that files are concatenated first and then compressed, resulting in higher compression ratios. This is how “tar.gz” archives work. WinRAR and 7-Zip can do solid compression as well. One thing I really like about RAR is its recovery record. For a 3-5% increase in archive size, some redundancy is added, allowing you to recover even seriously corrupted archives.

Solid archives without recovery records cannot be repaired, so why 7-Zip doesn’t have this feature built in is a mystery to me. You can mitigate the lack of a recovery record with a 3rd-party solution, but the tools are old and require extra steps.
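
To see why solid compression pays off when you have many small, similar files, here is a quick Python experiment using the standard `zlib` module. The sample “invoices” are made up for the demonstration; actual savings depend entirely on your data.

```python
import zlib

# 500 small, similar text files (made-up sample data)
files = [
    f"Invoice {n}\nCustomer: ACME Ltd\nTerms: net 30 days\nStatus: paid\n".encode()
    for n in range(500)
]

# Non-solid: every file is compressed on its own
separate = sum(len(zlib.compress(data)) for data in files)

# Solid: the files are concatenated first, then compressed as one stream
solid = len(zlib.compress(b"".join(files)))

print(f"original: {sum(map(len, files))} bytes")
print(f"compressed separately: {separate} bytes")
print(f"compressed as one solid stream: {solid} bytes")
```

The solid stream comes out far smaller, because the compressor can reuse what it has already seen in earlier files.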

Routine

How often you back up to external, offline media is up to you. It can be weekly or monthly or whatever, but it’s important that you have a routine. Personally I only back up company emails once per year, at the end of December. They’re just not that important to warrant more care. More important stuff is archived monthly.

The process can be automated, so the moment you plug in an external drive to the NAS, the data from a specific folder (e.g. Archive) is automatically copied to the drive. I prefer to do it manually.

Conclusion

So there you have it. The solutions presented here should scale from a home office to a small to medium company. What are your thoughts and experiences with data backups?